The purpose of this blog is to provide guidance to the industry on the key concepts of Computer Software Assurance (CSA) and to offer direction on applying rational, critical thinking commensurate with the risk to product quality and patient safety.

This document considers the challenges of the current computerized system validation (CSV) approach and proposes methods to overcome them through Computer Software Assurance.

Over the last decade, innovation and technology have advanced at tremendous speed and brought about the fourth industrial revolution (Industry 4.0) through smart manufacturing and automation. The pharmaceutical industry has adopted most of these technological innovations, such as Artificial Intelligence and Machine Learning, Big Data and Analytics, cloud computing, Robotic Process Automation, 3D printing, Virtual and Augmented Reality, the Internet of Things (IoT), and teleradiology; this adoption is now known as Pharma 4.0 (refer to our blog on Industry 4.0 for more details).

The traditional Computerized System Validation approach delays the validation of computerized systems, and its burdensome methodology deters the industry from pursuing automation.

Common Industry CSV Pain Points

The USFDA and the industry Computer Software Assurance (CSA) working group identified the following common pain points the industry faces when implementing new technologies under the traditional CSV methodology.

| Sr. No. | Barrier | Description |
|---|---|---|
| 1 | Deterrent to pursuing automation | The volume of documentation and the complexity of the computerized system validation process reduce the return on investment (ROI) of new technologies and automation, which deters their implementation. |
| 2 | Gathering evidence for auditors | A lack of knowledge and understanding of regulatory expectations for CSV has led the industry to collect evidence for every function of a computer system, beyond its intended scope, to satisfy auditors. This evidence gathering doubles the CSV implementation time. |
| 3 | Duplication of vendor efforts at client sites | Failure to assess product and supplier maturity, combined with inexperience in communicating with vendors, results in customers repeating vendor activities onsite during the implementation of computerized systems. |
| 4 | Burdensome and complex risk assessments | Traditional risk assessments are applied beyond the scope of the intended requirements, shifting the focus to unintended mitigations, burdensome testing, and unnecessary controls. |
| 5 | Testing documentation and errors | A high number of defects/deviations during testing are caused by test script errors, and the time spent correcting and resolving these errors adds no value to the actual computer system. |
| 6 | Numerous post-go-live issues | Despite the large amount of time spent on creating validation documentation and testing, numerous post-go-live issues are observed. |

New Approach to Validation

Automation:

The USFDA supports and encourages automation because it can improve productivity and efficiency, help with tracking and trending, and offer a host of other benefits.

Manufacturers can gain advantages from automation throughout the entire product lifecycle: they can reduce or eliminate human errors, optimize resources, and reduce patient risk. The USFDA’s position is that using these software products can be an excellent way to enhance product quality and patient safety, which, in the end, is the overarching goal.

Changing Paradigm:

Current industry practice in CSV programs is documentation-heavy, and documentation is produced at the expense of critical thinking and testing. CSA brings a paradigm shift by encouraging critical thinking over documentation. Using CSA concepts, companies can execute more testing with less documentation, scaled to the risk associated with each requirement.

LEVERAGE VENDOR DOCUMENTATION: Perform a vendor assessment and, based on the outcome, leverage vendor-executed testing when designing the product’s validation strategy. If the vendor demonstrates a strong quality management system (QMS), the validation strategy can be optimized to focus on the delta (what the vendor’s testing does not cover) and high-risk scenarios.

RISK RATING: CSA recommends a streamlined risk assessment process that performs risk-based testing at the requirement level (see CSA Risk Management Approach below for details).

This simplified approach uses only two variables:

• The requirement’s potential impact on product quality and patient safety.

• The requirement’s implementation method.

UNSCRIPTED TESTING: Unscripted testing frees the tester from following a click-by-click test script and allows free-form testing, with the results documented. Unscripted testing includes ad hoc and exploratory testing (see Types of Testing below for details).

Computer Software Assurance Key Drivers

Regulatory & Industry Initiative:

CSA is a collaborative effort between regulators and industry to address these issues, develop a joint understanding, and provide an efficient path forward that meets the goals of all stakeholders in the pharmaceutical industry. The biggest beneficiary is the patient.

Clarification from Regulators:

Regulators have provided clarification and guidance on many aspects of software qualification, and many areas are now clearly understood. The industry’s legacy understanding of the process, which made it inefficient, now needs to change in line with this clarification and guidance so that the process becomes efficient.

Appropriate Records:

One of the key drivers of CSA is an appropriate level of testing and supporting records. The FDA has clarified that it does not expect voluminous documentation of test execution; test records and supporting documents should be created at an appropriate level, as required. The level of testing should be based on the risk assessed for each computer system function.

Optimized Efforts:

CSA may or may not reduce the time taken to validate, but it optimizes validation effort so that time and resources are invested where they improve quality.

Pilot Studies Have Shown Desired Results:

The CSA process, as discussed and agreed by the stakeholders, has been executed at pilot level, and the results are encouraging and in line with expectations.

Supports Digitalization Drive:

The CSA approach encourages the use of automation tools for qualification activities. Many such products are available on the market; these tools not only make record capture efficient but also further the company’s digitization efforts.

CSA Risk Management Approach

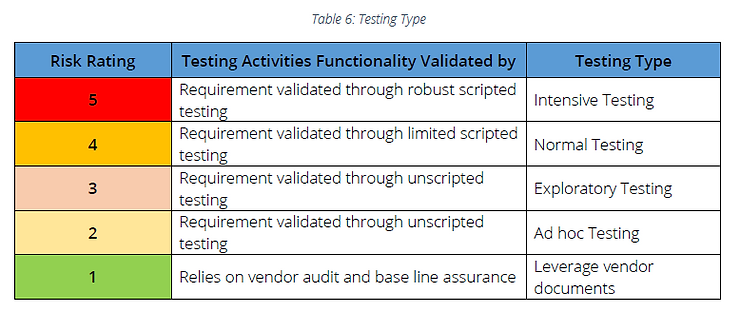

The CSA approach recommends specific testing types for each risk rating. The detailed step-wise process is explained below, and a Risk Rating template that can be used is provided in the Risk Rating template section.

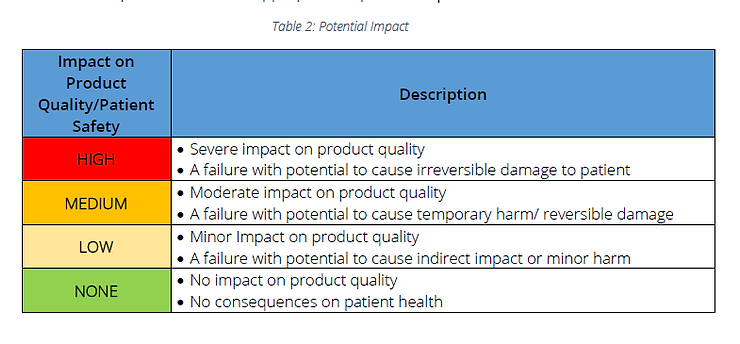

Step 1: For each user requirement, determine the potential impact of a functionality failure on product quality and patient safety. This should be done by a group of subject matter experts (SMEs) involved in the project, with representation from the appropriate departments.

Step 2: For each requirement, determine the functionality’s implementation method.

Table 3: Implementation Method

Step 3: For each requirement, determine the functionality’s risk rating based on its product quality/patient safety impact and its implementation method.

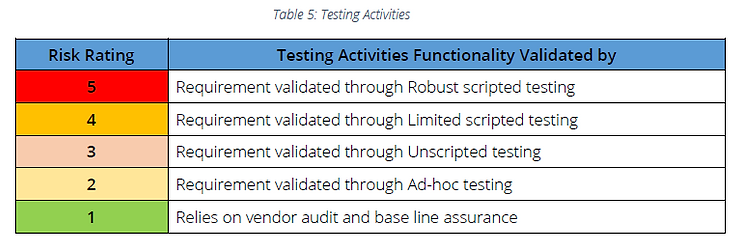

Step 4: Follow the recommended testing activities for the resulting risk rating.
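Steps 1 to 4 amount to a two-key table lookup. The Python sketch below illustrates that lookup under stated assumptions: the three impact levels, the three implementation methods (out-of-the-box, configured, custom), the numeric matrix values, and the rating-to-testing mapping are all hypothetical placeholders, not the matrix shown in the figures above. The mapping was only chosen to agree with the note under Recommended testing activities that Risk Rating 2 leads to ad hoc testing; substitute the matrix approved in your own validation plan.

```python
from enum import IntEnum


class Impact(IntEnum):
    """Step 1: potential impact of a functionality failure on product quality/patient safety."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


class Method(IntEnum):
    """Step 2: implementation method (ASSUMED categories, for illustration only)."""
    OUT_OF_THE_BOX = 1
    CONFIGURED = 2
    CUSTOM = 3


# Step 3: ILLUSTRATIVE risk matrix (rows = impact, columns = implementation method).
# Placeholder values only; replace with the matrix approved by your SME group.
RISK_MATRIX = {
    Impact.LOW: {Method.OUT_OF_THE_BOX: 1, Method.CONFIGURED: 2, Method.CUSTOM: 3},
    Impact.MEDIUM: {Method.OUT_OF_THE_BOX: 2, Method.CONFIGURED: 3, Method.CUSTOM: 4},
    Impact.HIGH: {Method.OUT_OF_THE_BOX: 3, Method.CONFIGURED: 4, Method.CUSTOM: 5},
}

# Step 4: ASSUMED mapping from risk rating to assurance approach.
RECOMMENDED_TESTING = {
    1: "Leverage vendor documentation",
    2: "Ad hoc testing (unscripted)",
    3: "Exploratory testing (unscripted)",
    4: "Normal testing (scripted)",
    5: "Intensive testing (scripted)",
}


def risk_rating(impact: Impact, method: Method) -> int:
    """Look up the risk rating for one requirement (Step 3)."""
    return RISK_MATRIX[impact][method]


def recommended_testing(impact: Impact, method: Method) -> str:
    """Return the assurance approach for one requirement (Step 4)."""
    return RECOMMENDED_TESTING[risk_rating(impact, method)]


if __name__ == "__main__":
    # Hypothetical requirements, for illustration only.
    requirements = [
        ("Batch record calculates yield", Impact.HIGH, Method.CUSTOM),
        ("Report header shows site name", Impact.LOW, Method.CONFIGURED),
    ]
    for description, impact, method in requirements:
        rating = risk_rating(impact, method)
        print(f"{description}: Risk Rating {rating} -> {recommended_testing(impact, method)}")
```

Keeping the matrix as data rather than nested conditionals lets the SME group review and approve the values separately from the code that applies them.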

Types of Testing

The main intent of CSA is to shift the focus from documentation to software testing and the early detection of system issues, especially those that impact product quality and patient safety. To this end, CSA suggests executing the following types of testing during system validation:

A. Testing Types

The specific type of testing required depends on each requirement’s risk rating (its impact and implementation method):

Intensive Testing

Intensive testing includes normal testing and additionally challenges the system’s ability with respect to the following factors:

- Repeatability Testing challenges the system’s ability to repeatedly do what it should.
- Performance Testing challenges the system’s ability to do what it should as fast and effectively as it should, according to specifications.
- Volume/Load Testing challenges the system’s ability to manage high loads as it should. Volume/load testing is required when system resources are critical.
- Structural/Path Testing challenges a computerized system’s internal structure by exercising detailed program code.
- Regression Testing challenges the system’s ability to still do what it should after being modified according to specified requirements, and verifies that portions of the computerized system not involved in the change were not adversely affected.

Normal Testing

Normal testing covers positive and negative testing. It challenges the system’s ability to do what it should according to specifications (positive testing) and to prevent what it should not do according to specifications (negative testing).

Exploratory Testing

Exploratory testing is unscripted testing in which the tester works toward a defined goal, drawing on critical thinking and knowledge of common software behaviors and types of failures.

Ad hoc Testing

Ad hoc testing is unscripted testing performed without any planning or predefined documentation; it is done by SMEs based on their experience and knowledge of the system.

B. Recommended testing activities

Note: If vendor management is not in place, requirements rated as Risk Rating 1 should be treated as Risk Rating 2, and ad hoc testing can be followed.
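The vendor-management note can be expressed as a small adjustment applied before a testing type is chosen. This is a minimal Python sketch; the function name and the check that ratings are positive integers are assumptions, and it deliberately does not encode the rest of the rating-to-testing mapping.

```python
def effective_risk_rating(rating: int, vendor_management_in_place: bool) -> int:
    """Apply the vendor-management note to a requirement's risk rating.

    Without vendor management, Risk Rating 1 is treated as Risk Rating 2
    (ad hoc testing then applies, per the note). Other ratings are unchanged.
    """
    if rating < 1:
        raise ValueError(f"risk rating must be a positive integer, got {rating}")
    if rating == 1 and not vendor_management_in_place:
        return 2
    return rating


if __name__ == "__main__":
    for rating, managed in [(1, True), (1, False), (3, False)]:
        print(rating, managed, "->", effective_risk_rating(rating, managed))
```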

Assurance approach and acceptable record of results

The following table explains the assurance approach for each risk rating and the acceptable forms of evidence.

| Assurance Approach | Test Plan | Test Results | Testing Evidence |
|---|---|---|---|
| Intensive Testing (Scripted) | • Test objectives • Detailed test cases (step by step) • Expected results | • Pass/fail for each test case • Details of any defects/deviations found and their disposition | • Detailed report of the assurance activity • Result for each test case (pass/fail indication only) • Screen capture or other printed evidence that makes the result of execution clear • Defects found and their disposition • Conclusion statement • Tester name and date of testing |
| Normal Testing (Scripted) | • Limited test cases (step by step) • Expected results | • Pass/fail for each test case • Details of any defects/deviations found and their disposition | • Detailed report of the assurance activity • Result for each test case (pass/fail indication only) • Screen capture or other printed evidence that makes the result of execution clear • Defects found and their disposition • Conclusion statement • Tester name and date of testing • Signature and date of the appropriate signatory authority |
| Exploratory Testing (Unscripted) | • High-level goals established to meet requirements • Step-by-step procedure not required | • Pass/fail result • Details of any failures/deviations found | • Summary description of features and functions tested • Result for each test plan objective (pass/fail indication only) • Defects found and their disposition • Conclusion statement • Tester name and date of testing • Additional evidence, such as screenshots or detailed recordings of actual outcomes during testing, is not required for systems with an audit trail facility |
| Ad hoc Testing (Unscripted) | • Testing of features and functions without any test goal (plan) | • Details of any failures/deviations found | • Summary description of features and functions tested • Defects found and their disposition • Conclusion statement • Tester name and date of testing • Additional evidence, such as screenshots or reports, is not required if the system has audit trail functionality |
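One way to use the table above is as a completeness check on each test record before review. The Python sketch below transcribes the Testing Evidence column into sets of required items; the key names (for example `result_per_test_case`) are hypothetical, and requiring screenshots for unscripted testing on systems without an audit trail is this sketch’s reading of the table rather than an explicit requirement.

```python
from __future__ import annotations

# Evidence items transcribed from the Testing Evidence column; key names are hypothetical.
SCRIPTED_EVIDENCE = {
    "assurance_activity_report",
    "result_per_test_case",
    "screen_capture",  # screen capture or other printed evidence of the result
    "defects_and_disposition",
    "conclusion_statement",
    "tester_name_and_date",
}
UNSCRIPTED_EVIDENCE = {
    "summary_of_features_tested",
    "defects_and_disposition",
    "conclusion_statement",
    "tester_name_and_date",
}

REQUIRED_EVIDENCE = {
    "intensive": SCRIPTED_EVIDENCE,
    "normal": SCRIPTED_EVIDENCE | {"signatory_signature_and_date"},
    "exploratory": UNSCRIPTED_EVIDENCE | {"result_per_objective"},
    "ad_hoc": UNSCRIPTED_EVIDENCE,
}


def missing_evidence(approach: str, record: dict, has_audit_trail: bool = False) -> list[str]:
    """Return, sorted, the required evidence items that are absent or empty in a test record."""
    required = set(REQUIRED_EVIDENCE[approach])  # copy so the shared sets are not mutated
    # The table waives screenshots for unscripted testing only when the system has an
    # audit trail; requiring them otherwise is this sketch's interpretation.
    if approach in ("exploratory", "ad_hoc") and not has_audit_trail:
        required.add("screen_capture")
    return sorted(item for item in required if not record.get(item))


if __name__ == "__main__":
    # Hypothetical ad hoc test record.
    record = {
        "summary_of_features_tested": "Login, logout, password reset",
        "defects_and_disposition": "None found",
        "conclusion_statement": "Features perform as intended",
        "tester_name_and_date": "A. Tester, 2024-01-15",
    }
    print(missing_evidence("ad_hoc", record, has_audit_trail=True))
    print(missing_evidence("ad_hoc", record, has_audit_trail=False))
```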

Note 1: The above testing strategies can be adopted for Installation Qualification (IQ), Operational Qualification (OQ), and Performance Qualification (PQ); alternatively, PQ can be done following a limited scripted testing approach. It is important that the organization documents the adopted approach in the project validation plan or an SOP.

Note 2: If a new change introduces additional requirements, the testing strategy shall be determined using the risk rating approach.

Risk Rating template

| Sr. No. | Requirement Description | Impact on Product Quality/Patient Safety | Implementation Method | Risk Rating | Test Specification |
|---|---|---|---|---|---|
|  |  |  |  |  |  |
|  |  |  |  |  |  |
|  |  |  |  |  |  |
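If the completed template is maintained electronically, a small script can keep it in a consistent, machine-readable layout. This Python sketch assumes CSV output; the field names mirror the template columns and Steps 1 to 4, and the example row is hypothetical.

```python
from __future__ import annotations

import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class RiskRatingRow:
    sr_no: int
    requirement_description: str
    impact: str                 # Step 1: impact on product quality/patient safety
    implementation_method: str  # Step 2
    risk_rating: int            # Step 3
    test_specification: str     # Step 4: assurance approach selected


def to_csv(rows: list[RiskRatingRow]) -> str:
    """Serialize template rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(RiskRatingRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical row, for illustration only.
    rows = [
        RiskRatingRow(1, "User login requires a unique user ID and password",
                      "High", "Configured", 4, "Normal testing (scripted)"),
    ]
    print(to_csv(rows))
```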